We, the community around the shogun.ml [1] open-source machine learning library, are looking for a developer for a paid 6 month pilot project (October 18 – March 19) on improving meta-learning capabilities (openml, coreml). The ideal candidate is a highly motivated MSc/PhD/postdoc with the desire to get involved in the open-source movement, who

- is based in London

- is able to start working in October

- is flexible enough to spend full-time or at least 50% on the project

- has a background in designing software in C++ (gcc, valgrind, C++11, etc)

- (optional) has knowledge of openml [2] and coreml [3]

- (optional) has experience with build management and dev-ops tools (git, cmake, travis, buildbot, linux, docker, etc)

- (optional) has experience in computational sciences (ml, stats, etc)

- (optional) has contributed to open-source before

The project is funded by the Alan Turing Institute, and at least part of the work will be located there. You will be supervised by the Shogun core development team, partly in person and partly remotely. This is a great opportunity to get involved in one of the oldest ML libraries out there, getting your hands dirty on a huge code-base, and tapping into the open-source community.

The project is currently in the planning stage. After a successful pilot, there is the option for an extension. If you are interested, please get in touch via the developers, the mailing list, or even better, read how to get involved [4] and send us a pull-request for an entrance task [5] on GitHub. See our website for contact details.

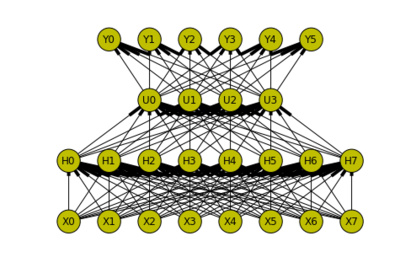

Shogun is a library aiming to offer unified and efficient machine learning methods. Its core is written in C++ and it interfaces to a large number of modern computing languages. The Shogun community is vibrant, diverse, and international. Shogun is a fiscally sponsored project of NumFOCUS [6], a nonprofit dedicated to supporting the open source scientific computing community.

[1] http://shogun.ml/

[2] https://www.openml.org/

[3] https://github.com/apple/coremltools

[4] https://github.com/shogun-toolbox/shogun/wiki/Getting-involved

[5] https://github.com/shogun-toolbox/shogun/issues

[6] https://numfocus.org/


DS3 summer school in Paris

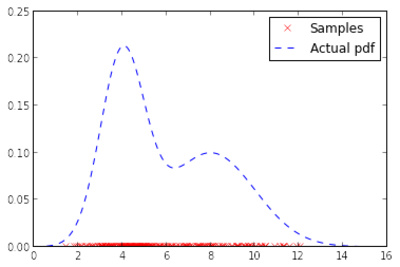

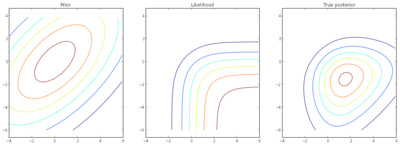

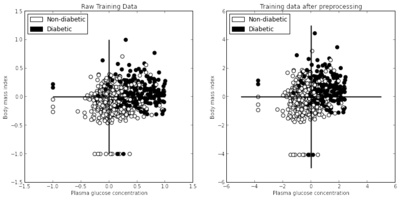

I had the pleasure of running a practical (actually two) on “representing and comparing probabilities using kernels” at the DS3 summer school at the Polytechnique in Paris, following a lecture by Arthur Gretton. Thanks to Zoltan Szabo for organising the session.

We covered the implementation basics of two-sample testing, independence testing, and goodness-of-fit testing, with examples including testing the quality of GAN samples, detecting dependence across translated documents, and more. I even managed to sneak Shogun into the practical 😉 Good fun overall!

Shogun participates in GSoC 2018

We are happy to announce that Shogun’s umbrella organisation, NumFOCUS, has been accepted for GSoC 2018 — and we will be mentoring through them!

If you are a talented student who wants to spend a summer hacking open-source, make sure to apply! Check out the NumFOCUS GSoC ideas page (contains Shogun’s and other projects’ ideas). Our very own ideas page can also be found in our wiki.

GSoC is a global program focused on bringing more student developers into open source software development. Students work with an open source organization on a 3 month programming project during their break from school.

Shogun provides efficient implementations of standard and state-of-the-art machine learning algorithms in an accessible, open-source environment. Shogun has been involved in GSoC since 2011; check out our blog posts about past years.

NumFOCUS is a nonprofit dedicated to supporting and promoting world-class, innovative, open source scientific computing.

Shogun now supports Intel MKL

Over the last few weeks and months, a few things came together that make Shogun both a lot easier to install, and a lot faster!

EDIT: While I was writing this post, Viktor leaked some of the results. I should work faster 😉

basic runtime performance comparison of various models on benchmark dataset of the new @ShogunToolbox release vs @scikit_learn #sklearn pic.twitter.com/FJeANfmo0e

— Viktor Gal (@vikgl) December 1, 2017

Easier installation: conda integration & windows

Thanks to Dougal, who did an awesome job of integrating shogun with conda, installing Shogun is now as easy as

[bash]conda install -c conda-forge shogun[/bash]

Viktor recently made this work under Windows as well (not easy! So far only the C++ interface is available, but this will change soon). Check his StackOverflow post if you want to give it a try. After years and years of cryptic installation procedures, these things hopefully make Shogun more accessible for new users. Thanks again Dougal!

Faster Shogun: Lapack, Eigen3 and Intel MKL

How did we make Shogun faster? Let’s take a little peek under the hood!

For fast Machine Learning algorithms, we need well-tuned implementations of linear algebra operations. One commonly used set of tools is LAPACK/BLAS: BLAS is a standard set of low-level routines for basic linear algebra operations; LAPACK is a set of routines for slightly more complex operations (e.g. matrix factorisations) built on top of BLAS.
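To make the two layers concrete, here is a deliberately naive pure-Python sketch. It is not how BLAS or LAPACK are implemented, and it is far slower than any tuned library. `gemm` mimics the kind of matrix-matrix product that BLAS standardises, and `cholesky` is an example of the factorisations that LAPACK provides. The function names and signatures are illustrative only and are not the real BLAS/LAPACK interfaces.

```python
import math


def gemm(alpha, A, B, beta, C):
    """Return alpha * A @ B + beta * C for matrices given as lists of rows."""
    n, k, m = len(A), len(B), len(B[0])
    return [
        [
            alpha * sum(A[i][p] * B[p][j] for p in range(k)) + beta * C[i][j]
            for j in range(m)
        ]
        for i in range(n)
    ]


def cholesky(A):
    """Return lower-triangular L with L @ L.T == A (A symmetric positive definite)."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][p] * L[j][p] for p in range(j))
            if i == j:
                L[i][j] = math.sqrt(A[i][i] - s)
            else:
                L[i][j] = (A[i][j] - s) / L[j][j]
    return L


A = [[4.0, 2.0], [2.0, 3.0]]
L = cholesky(A)

# Multiply L by its transpose to check that the factorisation recovers A.
Lt = [list(col) for col in zip(*L)]
zeros = [[0.0, 0.0], [0.0, 0.0]]
reconstructed = gemm(1.0, L, Lt, 0.0, zeros)
print(reconstructed)
```

The factorisation is written in terms of inner products, which is the same layering idea as LAPACK calling into BLAS: speed up the low-level routines and everything built on top gets faster.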

If we have an Intel CPU, we can use Intel’s Math Kernel Library (MKL), a LAPACK/BLAS suite optimised for Intel CPUs (for an example, check out the benchmarks of MKL-anaconda vs standard anaconda). However, since it is proprietary software, it used to be hard to get a copy without paying. So when Anaconda recently started shipping a free version of MKL with their Python distribution, Viktor got to work to harness MKL for Shogun.

Shogun historically used LAPACK/BLAS in many places (mostly with openblas), but recent versions have a flexible linear algebra backend which heavily uses Eigen3, a header-only, template-based C++ implementation of a lot of linear algebra. Eigen3 claims to be at least as fast as most free and non-free LAPACK/BLAS suites. BUT Eigen3 lacks parallel implementations of many matrix operations, which are crucial for many ML algorithms. MKL, on the other hand, has those parallel implementations, so we want to use them.

How does it work? Luckily, it is possible to compile Eigen3 code against MKL. Then MKL acts as a drop-in replacement within Shogun’s Eigen3/openblas backend. Long story short, let’s compare Shogun’s algorithms with Eigen3+openblas against the Eigen3+MKL version. Good news aside: Viktor recently set up a proper LAPACK detection in our cmake setup which makes everything work out of the box.

On a side note: I actually first found out about this when writing a paper on fast MMD implementations, where we compared an Eigendecomposition approach (MKL has multi-core versions!) with our own codes. Though we managed to beat it 🙂

Compiling Shogun using Docker

To compare the performance of Shogun using MKL vs Eigen3/openblas, we need to have a Shogun version that links against each of them. The easiest way to get this into place — in a way that anyone reading this post could reproduce — is using a Docker container. If you install Shogun using conda (see above), the openblas version is downloaded, so in this case we want to compile from scratch.

I start from the official anaconda image, which currently is a Debian jessie. I download the image, fire up a container with it, and finally start a bash (make sure to read up on containers vs images).

[bash]

sudo docker pull continuumio/anaconda3

sudo docker run -i -t continuumio/anaconda3 /bin/bash

[/bash]

Installing dependencies

I want to compile Shogun, so I need a compiler and the C++ library (which are not part of the image). I also use a compiler cache that speeds up compiling Shogun.

[bash] apt-get install -qq --force-yes --no-install-recommends make gcc g++ libc6-dev ccache [/bash]

Next, since I want to use Shogun from Python, I need swig to generate bindings to Shogun’s C++ core. Unfortunately, the current swig version in Debian jessie is too old (3.0.2) for Shogun, which needs at least 3.0.5. The same is true for cmake. But using conda makes updating those straight-forward:

[bash]

conda install swig cmake

[/bash]
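The version requirement above can be checked with a few lines of Python. This is only a minimal sketch: the helper names `parse_version` and `meets_minimum` are made up for illustration, and they only handle plain dotted numeric versions such as 3.0.2.

```python
def parse_version(text):
    """Turn a dotted version string such as '3.0.5' into a tuple of ints."""
    return tuple(int(part) for part in text.strip().split("."))


def meets_minimum(installed, minimum):
    """Return True if the installed version is at least the required minimum."""
    return parse_version(installed) >= parse_version(minimum)


# Debian jessie ships swig 3.0.2, but Shogun needs at least 3.0.5.
print(meets_minimum("3.0.2", "3.0.5"))  # False
print(meets_minimum("3.0.5", "3.0.5"))  # True
```

Comparing tuples of integers rather than raw strings matters once a component reaches two digits: as strings, "3.0.10" would sort before "3.0.5".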

Ok, we need one more thing: anaconda comes with its shiny new MKL and Shogun’s Eigen3 will be compiled against it. The compiler therefore needs the MKL header files:

[bash] conda install mkl-include [/bash]

Using Shogun without MKL (optional)

If you wanted to use Shogun’s non-MKL version, you could just install a precompiled binary version of Shogun using conda. If, however, you want to compare the manually compiled versions with this installation, you would need to make conda forget about MKL (which installs openblas instead). This causes all MKL optimised packages (numpy, sklearn, etc) to be re-installed. In addition, the blas header files are needed.

[bash]conda install -c anaconda nomkl

apt-get install libopenblas-dev[/bash]

Most people will skip this step.

Compiling the source code

Let’s download Shogun’s latest source code (development version after our new 6.1.2 release).

[bash] cd /opt/

git clone https://github.com/shogun-toolbox/shogun.git [/bash]

Let’s configure the beast. There are some options I set here: disable GPL codes & examples (which take time to compile) and disable xml serialization (which has some funny errors in this setup). More importantly, I set the (install-)prefix to the conda distribution of the anaconda image.

[bash] cd shogun

mkdir build

cd build

cmake .. -DINTERFACE_PYTHON=On -DLICENSE_GPL_SHOGUN=Off -DUSE_SVMLIGHT=Off -DBUILD_META_EXAMPLES=Off -DBUILD_EXAMPLES=Off -DENABLE_LIBXML2=Off -DCMAKE_PREFIX_PATH=/opt/conda -DCMAKE_INSTALL_PREFIX=/opt/conda [/bash]
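If you script such builds, it can help to keep the options in one place and generate the command line from them. The following is a minimal sketch of that idea; the option names and values are copied from the cmake call above, while the `cmake_command` helper is a made-up name and the comments only repeat the reasons given in this post.

```python
# Options copied from the cmake call above.
options = {
    "INTERFACE_PYTHON": "On",            # build the Python interface
    "LICENSE_GPL_SHOGUN": "Off",         # disable GPL codes
    "USE_SVMLIGHT": "Off",               # switched off alongside the GPL codes
    "BUILD_META_EXAMPLES": "Off",        # examples take time to compile
    "BUILD_EXAMPLES": "Off",
    "ENABLE_LIBXML2": "Off",             # xml serialization caused errors in this setup
    "CMAKE_PREFIX_PATH": "/opt/conda",   # look for dependencies in the conda distribution
    "CMAKE_INSTALL_PREFIX": "/opt/conda",  # install into the conda distribution
}


def cmake_command(source_dir, options):
    """Build the argument list for a cmake call from a dict of -D options."""
    return ["cmake", source_dir] + [
        f"-D{key}={value}" for key, value in options.items()
    ]


command = cmake_command("..", options)
print(" ".join(command))
```

The resulting list can be passed to `subprocess.run(command, check=True)` from inside the build directory.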

Compile and install

[bash] make -j 4

make install [/bash]

Let’s check that Shogun and its Python bindings reference either MKL or openblas. You can do that with

[bash] ldd /opt/conda/lib/libshogun.so | grep 'mkl\|blas'

ldd /opt/conda/lib/python3.6/site-packages/_shogun.so | grep 'mkl\|blas' [/bash]

For the procedure I outlined in this post, you should see something like

[bash]libmkl_rt.so => not found[/bash]

Never mind the “not found”, which is related to a broken ld setup in the anaconda image. Shogun sorts this out for you. The point is that there is either MKL or openblas. If you removed all the MKL packages first and installed openblas instead, it should be along the lines of

[bash]libopenblas.so.0 => /usr/lib/libopenblas.so.0 (0x00007f38e6eac000)[/bash]
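The same check can be automated. Below is a minimal sketch that classifies `ldd` output lines by the library name in front of the `=>`. The `detect_blas` helper is a made-up name, it only recognises MKL and openblas, and the two sample lines are the ones shown above.

```python
def detect_blas(ldd_output):
    """Return the set of BLAS backends mentioned in the output of ldd."""
    found = set()
    for line in ldd_output.splitlines():
        parts = line.split()
        if not parts:
            continue  # skip empty lines
        library = parts[0].lower()  # library name before the "=>"
        if "mkl" in library:
            found.add("mkl")
        elif "openblas" in library:
            found.add("openblas")
    return found


mkl_line = "libmkl_rt.so => not found"
openblas_line = "libopenblas.so.0 => /usr/lib/libopenblas.so.0 (0x00007f38e6eac000)"

print(detect_blas(mkl_line))       # {'mkl'}
print(detect_blas(openblas_line))  # {'openblas'}
```

To run it against a real build, feed it the text returned by `subprocess.run(["ldd", path], capture_output=True, text=True).stdout`.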

Comparing runtimes

I use a very simple code snippet to compare runtime of two Shogun algorithms: linear regression and PCA, both on random data, see below. Both of them are based on a matrix factorisation, where the multi-threaded MKL implementation can shine.

Here are the walltimes (from a single run). I have a X1 Carbon Thinkpad with an Intel i7-7500U CPU, which has 2 cores and 4 threads.

| | Openblas | MKL |

|---|---|---|

| Linear regression | 7.61 s | 2.09 s |

| PCA | 23 s | 12.6 s |

Pretty epic difference, especially given that this comes essentially for free. When running the benchmark and monitoring my CPU, I was surprised to see that openblas actually uses all four system threads, while MKL only uses two (it prefers it that way). That is what I call efficient!
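To put a number on "epic", the speed-up factors can be computed directly from the wall times in the table. Keep in mind that these come from a single run each, so treat the factors as rough indications rather than proper benchmark results.

```python
# Wall times in seconds, copied from the table above (single run each).
walltimes = {
    "Linear regression": {"openblas": 7.61, "mkl": 2.09},
    "PCA": {"openblas": 23.0, "mkl": 12.6},
}

# Speed-up = openblas time divided by MKL time.
speedups = {name: t["openblas"] / t["mkl"] for name, t in walltimes.items()}

for name, factor in speedups.items():
    print(f"{name}: {factor:.1f}x faster with MKL")
# Linear regression: 3.6x faster with MKL
# PCA: 1.8x faster with MKL
```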

It is also very interesting that in Viktor’s tweet above, Shogun with MKL can be quite a bit faster than sklearn. There are a lot of things to be benchmarked here: for example, in contrast to sklearn, our SVM solvers are accelerated through MKL as well, as we ported the code to use our linear algebra backend.

Conclusions

BLAS/LAPACK is a complicated topic! One take-away for me is that it is worth reading a bit about those things, as they do make a big difference.

A next step is to benchmark everything properly, using the benchmark framework by Marcus and Ryan from MLPack. In particular, I am curious how Shogun+MKL will then do compared to other ML libraries.

We should probably also make Shogun’s binary distributions (at least the one on conda) include an MKL build by default. For that, Shogun would have to move to the conda default channel, as conda-forge cannot have MKL. And for that, we need a BSD compatible release (currently Shogun is licensed under the viral GPL), which has been in the making for a while now (and is almost done).

Appendix: Shogun Linear regression code

[python]

import shogun as sg

import numpy as np

N = 30000

N_test = 300000

D = 1500

features_train = sg.RealFeatures(np.random.randn(D, N))

features_test = sg.RealFeatures(np.random.randn(D, N_test))

labels_train = sg.RegressionLabels(np.random.randn(N))

labels_test = sg.RegressionLabels(np.random.randn(N_test))

tau = 0.001

lrr = sg.LinearRidgeRegression(tau, features_train, labels_train)

%time lrr.train(); lrr.apply_regression(features_test)

[/python]

Appendix: Shogun PCA code

[python]

import shogun as sg

import numpy as np

N = 30000

N_test = 300000

D = 1500

D_target = 20

features_train = sg.RealFeatures(np.random.randn(D, N))

features_test = sg.RealFeatures(np.random.randn(D, N_test))

labels_train = sg.RegressionLabels(np.random.randn(N))

labels_test = sg.RegressionLabels(np.random.randn(N_test))

preprocessor = sg.PCA()

preprocessor.set_target_dim(D_target)

%time preprocessor.init(features_train); preprocessor.apply_to_feature_matrix(features_test)

[/python]
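Note that `%time` is an IPython magic, so the two snippets above only run as written inside IPython or Jupyter. In a plain Python script, a small wall-clock timer does a similar job. Here is a minimal sketch, where the `walltime` helper is a made-up name and the summed range is just a stand-in for the actual Shogun calls:

```python
import time
from contextlib import contextmanager


@contextmanager
def walltime(label, results):
    """Measure the wall time of the enclosed block and store it in results[label]."""
    start = time.perf_counter()
    try:
        yield
    finally:
        results[label] = time.perf_counter() - start


results = {}
with walltime("dummy workload", results):
    # Stand-in workload; replace with e.g. the train/apply calls from above.
    total = sum(range(1_000_000))

print(f"dummy workload: {results['dummy workload']:.3f} s")
```

`time.perf_counter` measures elapsed wall time, which is the relevant quantity here: with a multi-threaded BLAS, CPU time summed over threads can be much larger than what you actually wait for.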

Google Summer of Code 2016

Great news: Shogun just got accepted to GSoC 2016. After our break year in 2015, we are extremely excited to continue our GSoC tradition, which started in 2011 (when I first joined Shogun).

If you are a student and wish to spend the summer hacking Machine Learning, guided by a vibrant international community of academics, professionals, and NERDS, then pay us a visit. Oh, and you will receive a cheque for $5000 from Google.

This year, we focus on framework improvements rather than solely adding new algorithms. Consequently, most projects have a heavy focus on packaging and software engineering questions. But there will be Machine Learning too. We are aiming high!

Check out our ideas list and read how to get involved.

Shogun 4.0 and GSoC 2014 follow up

No, this is not about Fernando’s and my honeymoon …

The Shogun team just released version 4.0 of their community driven Machine Learning toolbox. This release features most of all the work of our 8 Google Summer of Code 2014 students, so this blog post is dedicated to them: you guys rock. This also brings an end to yet another very active year of Shogun: we organised a second workshop in Berlin, and I presented Shogun to the public in London, New York and Berlin.

For the 4th time, Shogun participated in Google’s wonderful program, which more than anything boosted the team’s size and motivation. What else makes people spend sleepless nights hunting bugs for the sake of Machine Learning for everyone? This year was the first time that I organised our participation. This ranged from writing the application at the last second and harassing potential mentors until they said ‘yes’, to making up overly ambitious projects to scare students away, and ending up mentoring too many students on my own. Jokes aside, this was a very challenging (in particular time-wise) but also a very rewarding experience that definitely sharpened my project organisation skills. As in the previous year, I tried to fuse my scientific life and Shogun’s GSoC participation: kernel methods and variational learning are things I touch on a daily basis at Gatsby. Many mentors were approached after I had met them at scientific Machine Learning conferences, and having been exposed to ML for some years now, I also find it easier to help students implement and write about popular ML algorithms.

Here is a list. (Note that all projects come with really nice IPython notebooks — something that we continued to insist on from last year.)

Fundamental ML algorithms by Parijat Mazumdar (parijat). Mentor: Fernando

Shogun needs more standard ML algorithms. Parijat implemented some of those: random forests, kernel density estimation and more. Parijat’s code quality is amazing and together with Fernando’s superb mentoring skills (his first time mentoring), this project is likely to have been very sustainable.

Notebook random forest, notebook KDE.

Kernel testing and feature selection by Rahul De (lambday). Mentor: Dino Sejdinovic, Heiko

Previous year’s student lambday continued to rock. First, he massively extended my 2012 project on kernel hypothesis testing to Big Data land. Dino, who was one of the invited speakers in the Shogun workshop last summer, and I are actually working on a journal article where we will use this code. Second, he extended the framework to perform feature selection via dependence measures. Third, he initiated and guided development of a framework for unifying Shogun’s linear algebra operations. This for example can be used to change existing algorithms from CPU to GPU with a compile switch — useful also for our deep learning project.

Variational Inference for Gaussian Processes by Wu Lin (yorkerlin). Mentor: Heiko, Emtiyaz Khan

In our third GSoC project on GPs, Wu took a couple of state-of-the-art approximate variational inference methods developed by Emtiyaz, and put them into Shogun’s framework. The result of this very involved and technical project is that we now have large-scale classification using GPs. Emtiyaz also was a speaker at our workshop.

Notebook

Shogun missionary by Saurabh. Mentor: Heiko

The idea of this project was to showcase Shogun’s abilities, something we definitely need to work on. Saurabh wrote a couple of Notebooks that are essentially ML tutorials using Shogun. If you want to know about ML basics, regression, classification, model-selection, SVMs, multiclass, or multiple kernel learning, this is for you. He also extended our web-demo framework to include, for example, model-selection for GPs.

OpenCV integration by Kislay. Mentor: Kevin

Kislay, after writing a very cool notebook on PCA for his application, wrote data-structures to bridge between Shogun and OpenCV. The project was supervised by Kevin, who is also one of our former GSoC students. This makes it possible to use the two libraries together in a neat way.

Deep learning by Khaled Nasr. Mentor: Theofanis, Sergey

The hype is on! After NIPS, Facebook, and Google DeepMind, Shogun has now also joined 😉 Khaled did a very good job in coding up the standard models, and was involved in generalising Shogun’s linear algebra on the fly with lambday. This is a project that is likely to have a second part. Check his superb notebooks on deep belief neural networks, convolutional networks, autoencoders, and restricted Boltzmann machines.

SO Learning with Approximate Inference by Jiaolong. Mentor: hushell, Thoralf

This was another project that was (co-)mentored by a former GSoC student. With the help of his mentors, Jiaolong implemented various approximate inference methods for structured output (SO) models. Check out his notebook.

Large-Scale Multi-Label Classification by Abinash. Mentor: Thoralf

Another project involving our structured output expert Thoralf as mentor. Abinash implemented large-scale multilabel learning, beating scikit-learn’s implementation both in runtime and accuracy. The last experiment is described in this notebook.

Finally, we sent two of our delegates (Thoralf and Fernando) to the 10th year jubilee mentor summit in California in late October. Really cool: I got lucky and won Google’s lottery on some extra places, so I could also join. The summit once again was overly colourful, bursting with creative minds who have the most diverse set of opinions and approaches, but who are all united by their excitement about open-source. The beauty of this community to me really lies in the people who do work purely driven by their interest in *the thing itself*, independent of competitive and in particular commercial interests, sometimes almost to an extent that is beyond any form of compromise. A wonderful illustration of this came at the mentor summit, during the reception in the Tech Museum in San Jose: Google’s speaker and head of finance Patrick Pichette (disclaimer: not sure, don’t quote me on this), who is the boss of Chris DiBona, who himself organises the GSoC, sought to inspire the audience to “think BIG” and to “change the lives of GSoC students”. Ten minutes later, guest speaker Linus Torvalds contemplated that he could not be a GSoC mentor as he would scare people away, and that the best way to get involved in open-source is to “start small”, a sentence after which P.P. left the room. Funnily enough, in GSoC this community is then hugged by a super capitalistic American internet company, and gladly lets it happen: we all love GSoC and Shogun certainly would not be where it is without it. I also want to mention the day Google rented a whole theme park for us nerds, which made Fernando try a roller-coaster for the first time after being pushed by MLPack maintainer Ryan and myself. After being horrified at first, he even started to talk about C++ the second or third time.

As you would expect from attending such geeky meetings, Thoralf, Fernando, and I also spent quite some time hacking Shogun, discussing ideas until late at night (of course getting emotional about them 🙂 ). I managed to take a picture of Fernando falling asleep while hacking Shogun’s modular interfaces. Some of those ideas are collected on our wiki.

- Improving usability

- Making Shogun more modular and slim

- Improving Shogun’s efficiency

Some of those ideas are also part of our theme for our GSoC 2015 application and our planned Hackathon. We have come to a point where we seriously need to focus on application and stability rather than adding more and more cutting-edge algorithms: Shogun’s almost 15 year old framework needs a face lift. GSoC students will see that this year’s project ideas focus on cleaning up the toolbox and implementing ML applications.

Meet the Shogun/MLPack crew, as nerdy as it gets 😉

Shogun in NYC

In late August, I was invited to NYC to present Shogun at an open-source Machine Learning software workshop (link), organised by John Langford. Seeing Shogun being recognised as a major player along with big ML/stats libraries like Theano, Stan, Torch, LibLinear, VowpalWabbit, etc really got me excited.

I talked to most of the other projects’ developers, and a few very interesting possible collaborations came up. One example is Shogun’s unique way of automagically generating interfaces to most computing languages via swig. It has been in our pipeline of ideas for a long time to pull this functionality out of Shogun and offer it to other projects in a modular way. Sergey has put together (link) a simple prototype and will continue to work on this soon.

Another thing is that we would like to integrate some (fixed) models from Stan into Shogun, for example to complement our collection of variational inference methods for Gaussian Processes with a full blown MCMC based approach.

While talking to Gunnar Raetsch, we had the idea to host a hackfest where we bring together all Shogun core-developers for a week, working on more sophisticated projects that are not suitable for Google Summer of Code. A generic framework for parallelising/distributing algorithms in Shogun would be a first idea, extending ideas from lambday’s GSoC 2013 project. This would again also be useful for other ML libraries and I in fact talked to John Langford about using ideas from VowpalWabbit here. We made a list of ideas (link) for such a hack and are currently trying to get funding for it.

The meeting was video-taped and I will put links for this soon.

Shogun workshop 2014

Another super nice event that happened in Berlin in July was the second Shogun workshop, which the Shogun team and I organised. We had a hands-on session and a main workshop day full of talks, cool people, and lots of Machine Learning. It is a great pleasure working with the Shogun team, and I am very happy that we were able to push this year’s workshop through.

I talked a bit about what Shogun is and what it tries to be, and gave some directions for future work. If you missed the event, we got full video coverage!

Shogun at EuroPython 2014

It is already a while ago: in July, I presented Shogun at EuroPython in Berlin. This was a super nice conference full of interesting people and projects. My talk was recorded and can be found [here], slides are [here].

Another super interesting project was presented by Thomas Wiecki: Probabilistic programming with PyMC3 [video]. This is a very interesting project, a bit similar to Stan, which allows one to plug together probabilistic models and then do HMC for inference, powered by automatic differentiation (which I am currently super excited about). Unlike Stan, this all happens in Python, which makes things super comfortable (example notebook). As I am working on kernel-based samplers (Kameleon MCMC & soon more), this might be a good vehicle to make them public, exploiting the nice auto-diff tool (Theano) that PyMC uses.

GSoC Interview with Sergey and me

Sergey and I gave an interview on Shogun and Google Summer of Code. Here it is:

The internet. More specifically #shogun on irc.freenode.net. Wasn’t IRC that thing that our big brothers used as a socialising substitute when they were teenagers back in the 90s? Anyways. We are talking to two of the hottest upcoming figures in machine learning open-source software, the Russian software entrepreneur Sergey Lisitsyn, and the big German machine Heiko Strathmann.

Hi guys, glad to meet you. Would you mind introducing yourself?

Sergey (S): Hey, I am Sergey. If you ask me what do I do apart from Shogun – I am currently working as a software engineer and finishing my Master’s studies at Samara State Aerospace University. I joined Shogun in 2011 as a student and now I am doing my best to help guys from the Shogun team to keep up with GSoC 2014.

Heiko (H): Hej, my name is Heiko. I am doing a PhD in Neuroscience & Machine Learning at the Gatsby Institute in London and joined Shogun three years ago during GSoC. I have loved open-source since my days in school.

Your project, Shogun, is about Machine Learning. That sounds scary and sexy, but what is it really?

H: My grandmother recently sent me an email asking about this ‘maschinelles Lernen’. I replied it is the art of finding structure in data in an automated way. She replied: Since when are you an artist? And what is this “data”? I showed her the movie PI by Darren Aronofsky where the main character at some point is able to predict stock prices after realising “the pattern”, and said that’s what we want to do with a computer. Since then, she is worried about me because the guy puts a drill into his head in the end….. Another cool application is for example to model brain patterns to allow people to learn how to use a prosthesis faster.

S: Or have you seen how your iPhone detects faces? That’s just a Support Vector Machine (SVM). It employs kernels which are inner products of non-linear mappings of Haar features into a reproducing kernel Hilbert Space so that it minimizes ….

Yeah, okok… What is the history of Shogun in the GSoC?

S: The project got started by Sören in his student days around 15 years ago. It was a research only tool for a couple of years before being made public. Over the years, more and more people joined, but the biggest boost came from GSoC…

H: We just got accepted into our 4th year in that program. We had 5+8+8 students so far who all successfully did the program with us. Wow I guess that’s a few million dollars. (EDITOR: actually 105,000$.) GSoC students forced Shogun to grow up in many ways: github, a farm of buildbots, proper unit-testing, a cloud-service, web-demos, etc were all set up by students. Also, the diversity of algorithms from latest research increased a lot. From the GSoC money, we were able to fund our first Shogun workshop in Berlin last summer.

How did you two get into Shogun and GSoC? Did the money play a role?

H: I was doing my undergraduate project back in 2010, which actually involved kernel SVMs, and used Shogun. I thought it would be a nice idea to put my ideas into it; also, I was lonely coding just on my own. In 2010, they were rejected from GSoC, but I eventually implemented my ideas in 2011. The money to me was very useful as I was planning to move to London soon. Being totally broke in that city one year later, I actually paid my rent from my second participation’s stipend – which I got for implementing ideas from my Master’s project at uni. Since 2013, I mentor other students and help organise the project. I think I would have stayed around without the money, but it would have been a bit tougher.

S: We were having a really hard winter in Russia. While I was walking my bear and clearing the roof of the snow, I realised I forgot to turn off my nuclear missile system…..

H: Tales!

S: Okay, so on another cold night I noticed a message on GSoC somewhere and then I just glanced over the list of accepted organizations and Shogun’s description was quite interesting so I joined a chat and started talking to people – the whole thing was breathtaking for me. As for the money – well, I was a student and was about to start my first part-time job as a developer – it was like a present for me but it didn’t play the main role!

H: To make it short: Sergey suddenly appeared and rocked the house coding in lightspeed, drinking Vodka.

But now you are not paid anymore, while still spending a lot of time on the project. What motivates you to do this?

S: It just pulls you in, and you feel like you are participating in something useful. That kind of appreciation is important!

H: Mentoring students is very rewarding indeed! Some of those guys are insanely motivated and talented. It is very nice to interact with the community with people from all over the world sharing the same interest. Trying to be a scientist, GSoC is also very useful in producing tools that myself or my colleagues need, but that nobody has the time to build properly. You see, there are all sorts of synergic effects in GSoC and my day-job at university, such as meeting new people or getting a job since you know how to code in a team.

How does this work? Did you ever publish papers based on GSoC work?

S: Yeah, I actually published a paper based on my GSoC 2011 work. It is called ‘Tapkee: An Efficient Dimension Reduction Library’ and was recently published in the Journal of Machine Learning Research. We started writing it up with my mentor Christian (Widmer) and later Fernando (Iglesias) joined our efforts. It took an enormous amount of time but we did it! Tapkee by the way is a Russian word for slippers.

H: I worked on a project on statistical simulation of global ozone data last year. The code is mainly based on one of my last year’s student’s project – a very clever and productive guy from Mumbai who I would never have met without the program, see http://www.ucl.ac.uk/roulette/ozoneexample

So you came all the way from being a student with GSoC up to being an organisation admin. How does the perspective change during this path?

H: I first had too much time so I coded open-source, then too little money so I coded open-source, then too much work so I mentor people coding it open-source. At some point I realised I like this stuff so much that I would like to help organising Shogun and bring together the students and scientists involved. It is great to give back to the community which played a major role for me in my studies. It is also sometimes quite amusing to get those emails by students applying, being worried about the same unimportant things that I worried about back then.

S: It seems to be quite natural actually. You could even miss the point when things change and you become a mentor. Once you are in the game, things go pretty fast. Especially if you have a full-time job and studies!

Are there any (forbidden) substances that you exploit to keep up with the workload?

S: It would sound strange but I am not addicted to vodka. Although I bet Heiko is addicted to beer and sausages.

H: Coffeecoffeecoffeee…… Well, to be honest GSoC definitely reduces your sleep no matter whether you are either student, mentor, or admin. By the way, our 3.0 release was labelled: Powered by Vodka, Mate, and beer.

Do you crazy Nerds actually ever go away from your computers?

H: No.

S: Once we all met at our workshop in Berlin – but we weren’t really away from our computers. Why on earth to do that?

Any tips for upcoming members of the open-source community? For students? Mentors? Admins?

H: Students: Do GSoC! You will learn a lot. Mentors: Do GSoC! You will get a lot. Admins/Mentors: Don’t do GSoC, it ruins your health. Rather collect stamps!

S: He is kidding. (whispers: “we need this … come on … just be nice to them”)

H: Okay, to be honest: just have fun with what you are doing!

Due to the missing interest in the community, Sergey and Heiko interviewed themselves on their own.

Shogun: http://www.shogun-toolbox.org

GSoC 2013 blog: http://herrstrathmann.de/shogun-blog/110-shogun-3-0.html

GSoC 2014 ideas: http://www.shogun-toolbox.org/page/Events/gsoc2014_ideas

Heiko: http://herrstrathmann.de/

Sergey: http://cv.lisitsyn.me/