Last week, I was again very busy with exams and doing experiments for a NIPS submission.

The latter is somewhat related to my GSoC project, and I will implement it once the other work is done:

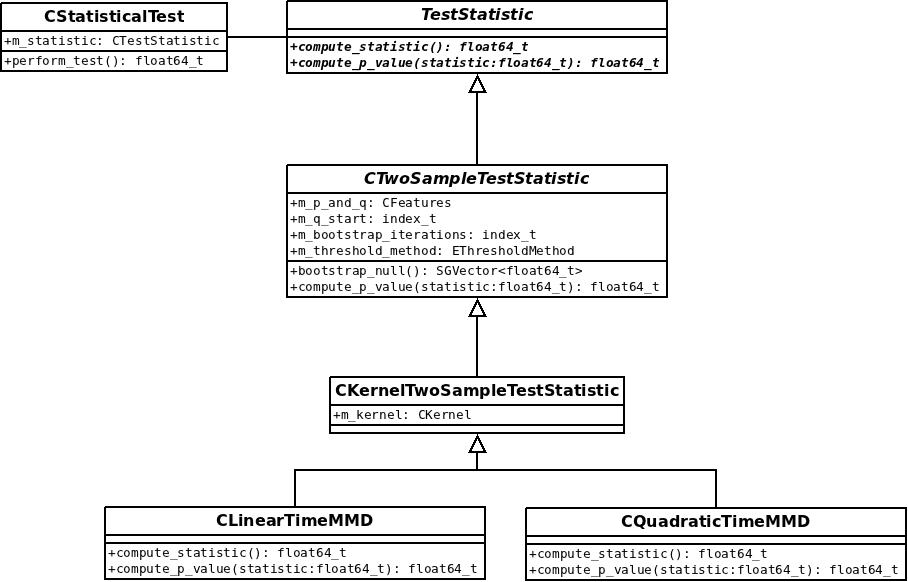

We developed a method for selecting optimal coefficients of a non-negative combination of kernels for the linear-time (i.e. large-scale) MMD two-sample test. The criterion being optimised is the ratio of the linear-time MMD \(\eta_k\) to its standard deviation \(\sigma_k\), i.e.

\[ k_*=\arg \sup_{k\in\mathcal{K}} \eta_k \sigma_k^{-1}. \]

That is equivalent to solving the quadratic program

\[

\min \{ \beta^T\hat{Q}\beta : \beta^T \hat{\eta}=1, \beta\succeq0\}

\]

where the combination of kernels is given by

\[

\mathcal{K}:=\{k : k=\sum_{u=1}^d\beta_uk_u,\sum_{u=1}^d\beta_u\leq D,\beta_u\geq0, \forall u\in\{1,\ldots,d\}\}

\]

Here, \(\hat{Q}\) is a linear-time estimate of the covariance of the MMD estimates for the base kernels, and \(\hat{\eta}\) is the vector of linear-time MMD estimates, one for each base kernel \(k_u\) in the combination above.
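To make the quadratic program concrete, here is a minimal NumPy sketch; it is not the SHOGUN implementation. It assumes Gaussian base kernels with hypothetical bandwidths, pairs consecutive samples to get the linear-time terms, and adds a small ridge to \(\hat{Q}\) for numerical stability (the `ridge` parameter and all function names are my own choices for illustration). Since the ratio \(\eta_k \sigma_k^{-1}\) does not change when \(\beta\) is rescaled, fixing \(\beta^T\hat{\eta}=1\) and minimising \(\beta^T\hat{Q}\beta\) maximises the ratio, provided at least one entry of \(\hat{\eta}\) is positive. Instead of calling a QP solver, the sketch enumerates all possible supports of \(\beta\), which is exact but only practical for a handful of kernels.

```python
from itertools import combinations

import numpy as np


def gaussian_kernel(a, b, width):
    """Row-wise Gaussian kernel values k(a_i, b_i)."""
    sq_dist = np.sum((a - b) ** 2, axis=1)
    return np.exp(-sq_dist / (2.0 * width ** 2))


def linear_time_h(x, y, width):
    """Terms whose mean is the linear-time MMD estimate for one kernel.

    Consecutive samples are paired, so n samples give n // 2 terms.
    """
    n = (min(len(x), len(y)) // 2) * 2
    x1, x2 = x[0:n:2], x[1:n:2]
    y1, y2 = y[0:n:2], y[1:n:2]
    return (gaussian_kernel(x1, x2, width) + gaussian_kernel(y1, y2, width)
            - gaussian_kernel(x1, y2, width) - gaussian_kernel(x2, y1, width))


def estimate_eta_and_q(x, y, widths):
    """Return (eta_hat, q_hat): per-kernel MMD estimates and their covariance."""
    h = np.stack([linear_time_h(x, y, w) for w in widths])  # shape (d, n // 2)
    eta_hat = h.mean(axis=1)
    q_hat = np.atleast_2d(np.cov(h))
    return eta_hat, q_hat


def solve_weights(eta_hat, q_hat, ridge=1e-4):
    """Solve min b^T Q b  s.t.  b^T eta = 1, b >= 0 by enumerating supports.

    On each candidate support the equality-constrained problem has a closed
    form; the best candidate that is also non-negative is the solution.
    Returns None if no feasible point exists (no positive entry in eta_hat).
    """
    d = len(eta_hat)
    q_reg = q_hat + ridge * np.eye(d)  # assumption: small ridge for stability
    best_beta, best_obj = None, np.inf
    for size in range(1, d + 1):
        for support in combinations(range(d), size):
            idx = np.array(support)
            q_s = q_reg[np.ix_(idx, idx)]
            eta_s = eta_hat[idx]
            z = np.linalg.solve(q_s, eta_s)
            denom = eta_s @ z
            if denom <= 0:
                continue
            beta_s = z / denom
            if np.any(beta_s < 0):
                continue
            obj = beta_s @ q_s @ beta_s
            if obj < best_obj:
                best_obj = obj
                best_beta = np.zeros(d)
                best_beta[idx] = beta_s
    return best_beta


# Usage on toy data: two Gaussians that differ in their mean.
rng = np.random.default_rng(0)
x = rng.normal(0.0, 1.0, size=(2000, 2))
y = rng.normal(0.5, 1.0, size=(2000, 2))
widths = [0.5, 1.0, 2.0]  # hypothetical bandwidths
eta_hat, q_hat = estimate_eta_and_q(x, y, widths)
beta = solve_weights(eta_hat, q_hat)
print("kernel weights:", beta)
```

The resulting weights can be rescaled afterwards so that they sum to at most \(D\), since only their direction matters for the criterion. For more than a few kernels, a proper QP solver would replace the enumeration.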

Apart from that, I implemented a method to approximate the null distribution of the quadratic-time MMD, following [1]. It is based on the eigenspectrum of the kernel matrix of the merged samples from the two distributions. It still needs to be compared against the MATLAB implementation. It comes with some minor helper functions for matrix algebra.
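The idea behind the spectrum approach can be sketched in a few lines of NumPy. This is my own illustration rather than the SHOGUN code, and it rests on several assumptions: equal sample sizes \(m\) for both distributions, a Gaussian kernel, the biased statistic \(m\,\mathrm{MMD}_b^2\), and eigenvalues of the centred kernel matrix divided by the total number of points \(2m\). In particular, the normalisation constants should be checked against a reference implementation before relying on them. Null samples are drawn as \(\sum_l \hat{\lambda}_l z_l^2\) with \(z_l \sim \mathcal{N}(0,2)\).

```python
import numpy as np


def gaussian_gram(z, width):
    """Gaussian kernel matrix on the rows of z."""
    sq_norms = np.sum(z ** 2, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * z @ z.T
    return np.exp(-np.maximum(sq_dists, 0.0) / (2.0 * width ** 2))


def biased_quadratic_mmd(gram, m):
    """Biased quadratic-time MMD^2 from the kernel matrix of merged samples.

    The first m rows/columns belong to one sample, the rest to the other.
    """
    k_xx = gram[:m, :m]
    k_yy = gram[m:, m:]
    k_xy = gram[:m, m:]
    return k_xx.mean() + k_yy.mean() - 2.0 * k_xy.mean()


def sample_null_spectrum(gram, num_samples=1000, num_eigs=None, rng=None):
    """Draw samples approximating the null distribution of m * MMD_b^2."""
    rng = np.random.default_rng() if rng is None else rng
    n_total = gram.shape[0]
    # Centre the kernel matrix: H K H with H = I - 11^T / n_total.
    centering = np.eye(n_total) - np.ones((n_total, n_total)) / n_total
    centred = centering @ gram @ centering
    eigvals = np.linalg.eigvalsh(centred)[::-1]  # largest first
    eigvals = np.clip(eigvals, 0.0, None)        # drop numerical negatives
    if num_eigs is not None:
        eigvals = eigvals[:num_eigs]
    lam = eigvals / n_total  # assumption: normalise by total sample count
    z = rng.normal(scale=np.sqrt(2.0), size=(num_samples, lam.size))
    return (z ** 2) @ lam


# Usage on toy data drawn from the same distribution (null hypothesis true).
rng = np.random.default_rng(0)
m = 50
x = rng.normal(size=(m, 2))
y = rng.normal(size=(m, 2))
gram = gaussian_gram(np.vstack([x, y]), width=1.0)
statistic = m * biased_quadratic_mmd(gram, m)
null_samples = sample_null_spectrum(gram, num_samples=500, rng=rng)
p_value = np.mean(null_samples >= statistic)
print("statistic:", statistic, "p-value:", p_value)
```

A test threshold at level \(\alpha\) would then be the \((1-\alpha)\) quantile of `null_samples`. Truncating to the leading eigenvalues via `num_eigs` trades accuracy for speed.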

This week, I will finally have my last exam and then continue with the advanced methods for computing test thresholds.

[1]: Gretton, A., Fukumizu, K., & Harchaoui, Z. (2011). A fast, consistent kernel two-sample test.

I participated in the GSoC 2011 for the SHOGUN machine learning toolbox (
